Why hide your website from search engines and users?

The main reasons to hide a website from search engines and users are as follows:

- Unfinished websites: During development and testing, it is better to keep your website private so that incomplete pages are not indexed.

- Restricted websites: If your website is invite-only, it is better to keep it out of search engine indexes.

- Test accounts: During final testing before launch, it is essential to keep the website private and out of search engine indexes.

Want to hide your website from search engines? We've prepared a tutorial you can follow yourself.

Password

If you want to make sure that Google and other search engines cannot access your website during development or testing, protect it with a password. Keep the password secret to prevent unauthorized access to your data.

If you still need to share access with other users, give them a direct link and the login details. Search engines cannot index content that sits behind a password.

How to Protect Your Website Using .htaccess

To protect a website with a password on an Apache server, you can use .htaccess. Here is one way to do it:

1. Step One: Make a .htpasswd File

First, open a text editor and add a line with your username and password separated by a colon.

Second, hash the password in a format the server accepts, using either the htpasswd tool from apache2-utils on Linux or an online tool.

Third, check that the line in your .htpasswd file has the following format, with your own username and hashed password:

webcapitan:$apr1$vz5oywab$qjF1x5.E9xeaCo/385dst0

Save your file as .htpasswd and upload it to your web hosting in ASCII (not BINARY) transfer mode. Note its full path on the server, since you will need it in the next step:

/full/path/to/.htpasswd

Do not put the .htpasswd file in a publicly accessible folder.
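If you prefer a script to an online tool, the hashing step can be sketched in Python. This is only an illustration under stated assumptions: it produces Apache's "{SHA}" format, not the $apr1$ format shown in the example line, because "{SHA}" needs nothing beyond the standard library. "{SHA}" is unsalted SHA-1 and is weak, so the htpasswd tool remains the better choice for a real site. The function name and the placeholder password are hypothetical.

```python
import base64
import hashlib


def htpasswd_sha1_line(username: str, password: str) -> str:
    """Return a .htpasswd line in Apache's "{SHA}" format.

    Assumption: this is NOT the $apr1$ format from the example line.
    "{SHA}" is an unsalted SHA-1 digest; Apache accepts it, but it is
    weak, so prefer the htpasswd tool for real deployments.
    """
    if ":" in username:
        # The colon separates username and hash, so it cannot be part of the name.
        raise ValueError("username must not contain a colon")
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    encoded = base64.b64encode(digest).decode("ascii")
    return f"{username}:{{SHA}}{encoded}"


if __name__ == "__main__":
    # "change-me" is a placeholder password for illustration only.
    print(htpasswd_sha1_line("webcapitan", "change-me"))
```

Paste the printed line into your .htpasswd file in place of a line produced by the htpasswd tool.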

2. Step Two: Make a .htaccess File

Open a text editor and add the following directives:

    AuthUserFile /full/path/to/.htpasswd
    AuthName "Password Protected Area"
    AuthType Basic
    <limit GET POST>
    require valid-user
    </limit>

Update the AuthUserFile line by entering the full path of your .htpasswd file.

To customize the message shown in the login prompt, modify the text in the AuthName line.

Upload the .htaccess file to the directory you want to protect. It applies to that directory and all of its subdirectories.

For example, you can place it as follows:

1. in the root of your virtual host to protect the entire website:

/srv/data/web/vhosts/www.mysite.com/htdocs

2. in a particular directory to protect only that directory:

/srv/data/web/vhosts/www.mysite.com/htdocs/myprivatestuff/

How to hide your website from search engines using WordPress Plugins

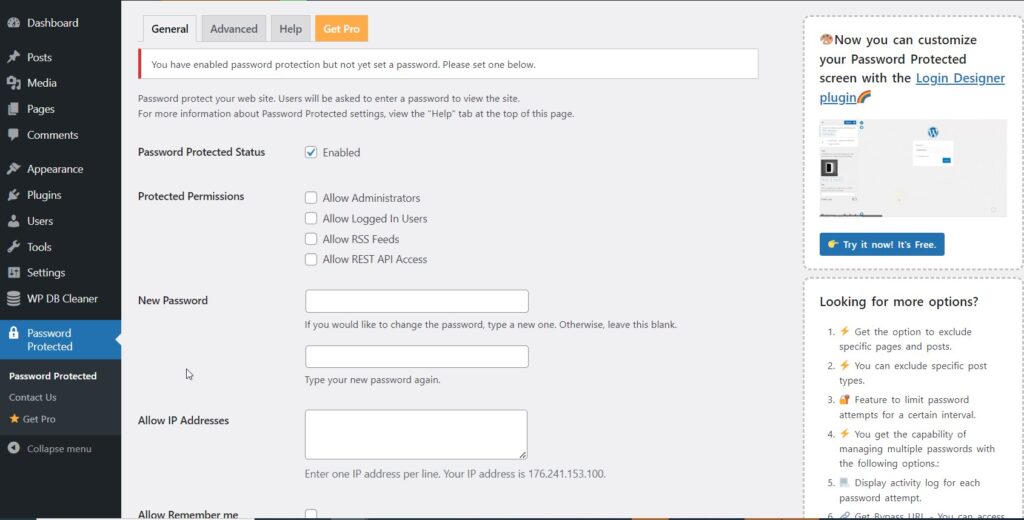

Many plugins are available for password protection, but we recommend the Password Protected plugin, which we have already tested and found reliable and easy to use.

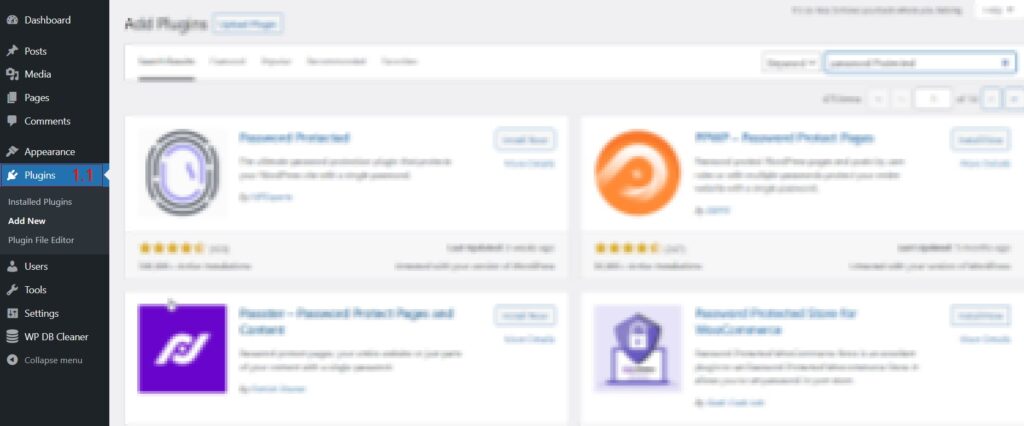

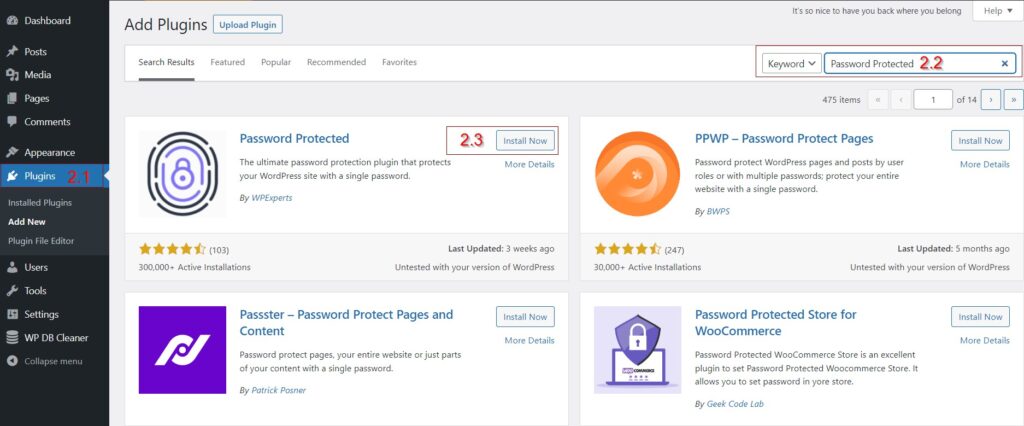

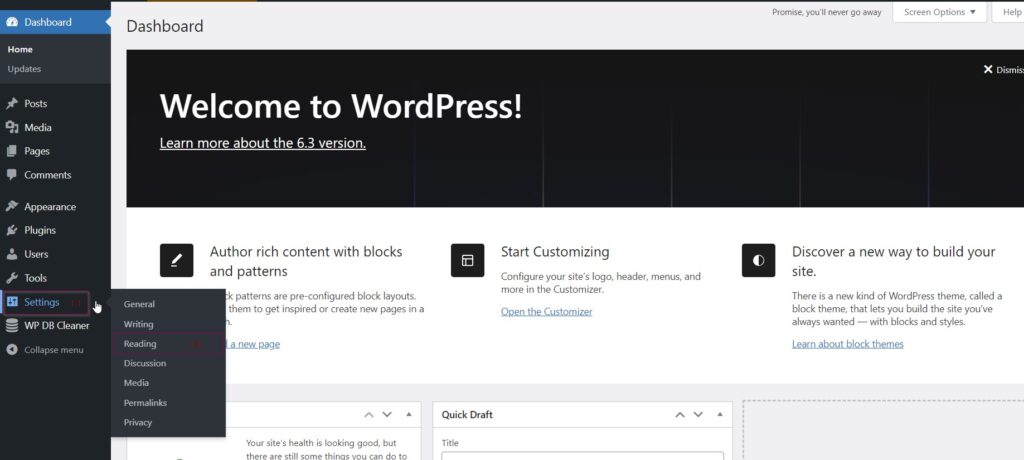

1. Go to Plugins (1.1)

2. Click Add Plugin (2.1), type "Password Protected" (2.2) in the search field, and install the plugin (2.3)

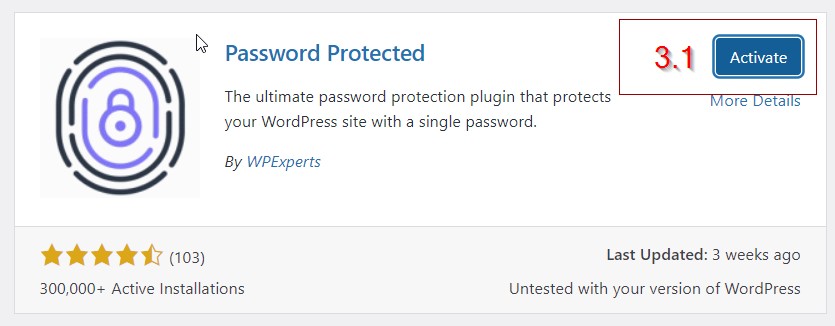

3. Activate the plugin (3.1)

After installing and activating the plugin, go to Settings → Password Protected for customization.

WordPress offers other plugins and settings for this purpose as well, which is one reason we prefer it to a custom CMS.

Block Crawling

Blocking crawling with the robots.txt file tells Googlebot and other well-behaved crawlers not to access your website.

How to hide a website from crawling using robots.txt

The robots.txt file contains instructions for search engine crawlers, such as which parts of the web resource to scan and which to ignore.

First, add rules to the file. The two main directives are:

1. User-agent names the bot that the rules apply to, such as Googlebot or Bingbot.

2. Disallow lists the path that the bot must not crawl.

If you want to prevent Google's bot from crawling a specific folder of your website, add the following rules:

    User-agent: Googlebot
    Disallow: /example-subfolder/

You can also block search bots from crawling a specific web page:

    User-agent: Googlebot
    Disallow: /services/webdesign/

If you want to disallow all search engine bots from accessing your website via the robots.txt file, use an asterisk (*) next to User-agent and a slash (/) next to Disallow as shown below:

    User-agent: *
    Disallow: /

You can set different rules for different search engines by adding several User-agent groups to the file. Remember to save the robots.txt file in the root of your website to apply the changes.
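Before uploading the file, you may want to check that the rules do what you expect. Below is a minimal sketch that uses Python's standard urllib.robotparser module and the rules from the examples above. The helper name is_allowed and the host www.mysite.com are placeholders. Note that Python's parser uses simple prefix matching, so its verdicts may differ from a real search engine's for more complex patterns.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines, so no network is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Rules copied from the examples above.
FOLDER_RULES = "User-agent: Googlebot\nDisallow: /example-subfolder/\n"
BLOCK_ALL = "User-agent: *\nDisallow: /\n"

if __name__ == "__main__":
    page = "https://www.mysite.com/example-subfolder/page.html"
    print("Googlebot, folder rules:", is_allowed(FOLDER_RULES, "Googlebot", page))
    print("Bingbot, folder rules:  ", is_allowed(FOLDER_RULES, "Bingbot", page))
    print("Bingbot, block all:     ", is_allowed(BLOCK_ALL, "Bingbot", page))
```

With the folder rules, only Googlebot is kept out of /example-subfolder/, while bots without a matching User-agent group stay unrestricted.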

Block Indexing

To block indexing, add a noindex rule to your pages.

There are two ways to apply the noindex rule:

1. as a <meta> tag;

2. as an HTTP response header.

Both methods have the same effect. Choose whichever is more convenient for your website and suits the content type. Keep in mind that Google does not support the noindex rule in the robots.txt file. You can also combine noindex with other rules, such as a nofollow hint, in a single tag: <meta name="robots" content="noindex, nofollow">.

Hide your website from search engines using meta tags

To block search engines from indexing a page, add a <meta> tag with the noindex rule to the page's <head> section:

<meta name="robots" content="noindex">

To block only Google's web crawlers from indexing a particular page, enter the following:

<meta name="googlebot" content="noindex">
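To confirm that a page really carries one of these tags, you could scan its HTML. The following is a minimal sketch built on Python's standard html.parser module. The class and function names are hypothetical, and for simplicity it looks at every <meta> tag in the document rather than only those inside <head>.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the rules found in robots and googlebot <meta> tags."""

    def __init__(self):
        super().__init__()
        self.directives = {}  # meta name -> set of rules, all lowercased

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").strip().lower()
        content = attr.get("content") or ""
        if name in ("robots", "googlebot"):
            rules = {part.strip().lower() for part in content.split(",") if part.strip()}
            self.directives.setdefault(name, set()).update(rules)


def blocks_indexing(html: str, crawler: str = "robots") -> bool:
    """Return True if the page asks the given crawler not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # Generic "robots" rules apply to every crawler, so merge them in.
    rules = parser.directives.get("robots", set()) | parser.directives.get(crawler, set())
    return "noindex" in rules or "none" in rules


if __name__ == "__main__":
    sample = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(blocks_indexing(sample))
```

Pass crawler="googlebot" to also take the Google-specific tag into account.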

Instead of using a <meta> tag, you can return an X-Robots-Tag HTTP header with a value of noindex or none. This approach also works for non-HTML resources such as PDFs, videos and images. For instance, the following HTTP response includes an X-Robots-Tag header that instructs search engines not to index a page:

    HTTP/1.1 200 OK
    (...)
    X-Robots-Tag: noindex
    (...)
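How you set this header depends on your server or framework. As one illustration, assuming a Python application served over WSGI (which the tutorial itself does not cover), a small wrapper can add the header to every response. The names add_noindex_header and demo_app are hypothetical.

```python
def add_noindex_header(app):
    """Wrap a WSGI app so that every response carries X-Robots-Tag: noindex."""

    def wrapped(environ, start_response):
        def start_with_noindex(status, headers, exc_info=None):
            # Drop any existing X-Robots-Tag so the header is sent only once.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex"))
            if exc_info is not None:
                return start_response(status, headers, exc_info)
            return start_response(status, headers)

        return app(environ, start_with_noindex)

    return wrapped


def demo_app(environ, start_response):
    """Hypothetical stand-in for a real application that serves a PDF."""
    start_response("200 OK", [("Content-Type", "application/pdf")])
    return [b"%PDF-1.4 placeholder"]


# The wrapped app can be handed to any WSGI server.
application = add_noindex_header(demo_app)
```

To try it locally, the wrapped application can be served with the standard wsgiref.simple_server module, and the response headers inspected in the browser's developer tools.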

How to noindex a page in WordPress?

The following steps show how to discourage search engines from indexing a WordPress website during its development, using the WordPress admin panel:

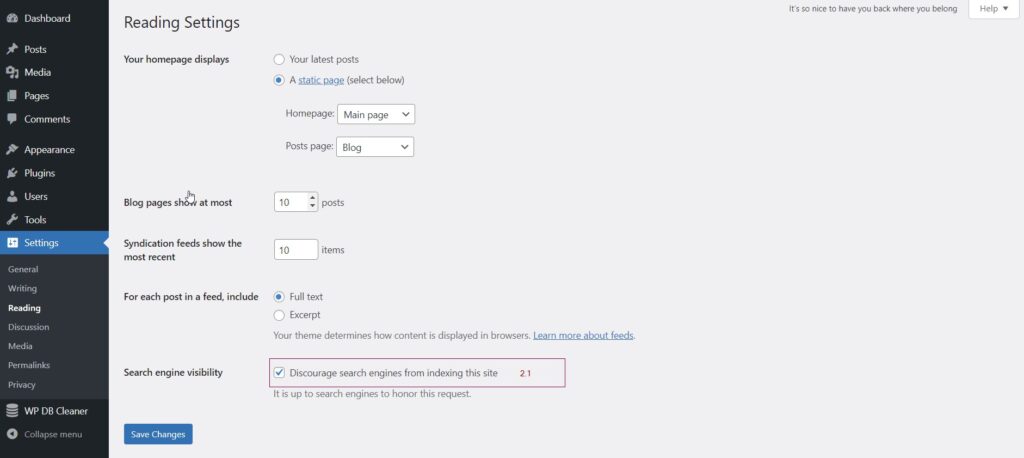

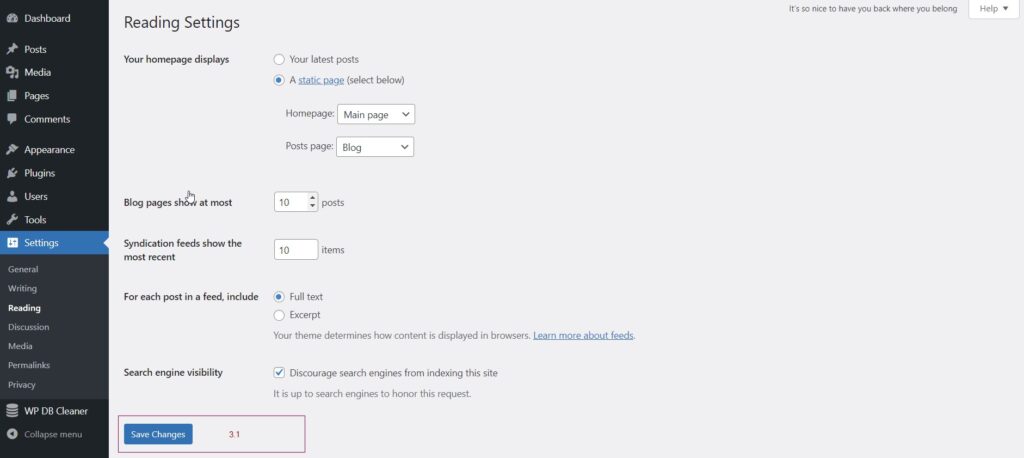

1. Go to Settings (1.1) → Reading (1.2)

2. Select the "Discourage search engines from indexing this site" (2.1) option to turn off search engine indexing

3. Click the “Save Changes” button.

Conclusion

We hope this tutorial helps you hide your website or a specific page from search engines or users. Password protection remains the most reliable and secure way to restrict access to a website. Always check which content you allow to be indexed, since indexing the wrong content will hurt your SEO. If you have any questions about web development or SEO, please feel free to contact us.